There’s Too Much Happening (Part 1)

The announcements are streaming by and the FOMO is real.

As I was doom scrolling through #neurips Twitter this week, I was reminded of that video of people trying to match Eliud Kipchoge’s marathon pace on a giant treadmill. Some fare better than others, but even the ones who aren’t flailing their arms wildly eventually stumble and roll off screen.

If you could measure the rate of news in miles per hour, the AI industry is pushing Kipchoge pace this week. Everyone reacts to change differently, and maybe you are someone who sees the firehose of announcements and thinks, “So fun! I can’t wait to spend my weekend hacking on all the cool new models!” If you are that person, I am happy for you. I am not that person. I am the person who sees the firehose of announcements and thinks, “Jesus, I am so behind. I’m never going to catch up.” Then I worry about that on a loop and do not touch the new models.

I can offer two pieces of advice to people who have a similar reaction. The first is physical activity. The best cure to AI-induced anxiety is to do cardio while listening to pop music. If you’re both anxious about AI and angry at the patriarchy, I suggest choosing a female artist. Olivia Rodrigo is a good place to start.

My second piece of advice is to take a thing that seems intimidating and learn about it. This can be really hard to do, particularly if you have perfectionist tendencies and like to understand things deeply. It’s not possible to understand everything deeply if things are racing by at 13 miles an hour, but that’s ok. The goal isn’t to be an expert, the goal is to learn. And maybe even have a little fun as you do it.

I assigned myself two learning projects this week. One is a hands-on-keyboard assignment, and the other was to find a tweet about AI that I didn’t understand and do enough research that I could explain it to you guys. So, here is my tweet explainer deliverable. Hopefully it provides some scaffolding for you to do your own learning projects.

Erica’s Tweet Report

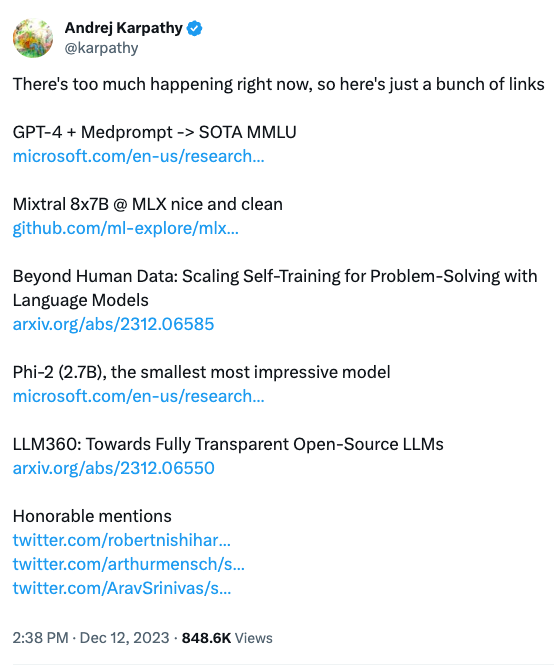

This week, Andrej Karpathy wrote, “There’s too much happening right now, so here’s just a bunch of links” proceed by eight links and nine acronyms. Inspired by the great Reply All segment Yes Yes No, I’m going to attempt to explain what the hell he’s talking about.

First, who is this guy? Andrej Karpathy is a research scientist who did pioneering work on using neural networks for computer vision and natural language processing. He did his PhD at Stanford with Fei-Fei Li, (yay women!) and was a founding member of OpenAI. If he feels overwhelmed by everything happening, then there’s a lot happening.

Why is there so much happening? The largest annual conference on AI (now, thankfully, called NeurIPS) is happening this week in New Orleans, and it’s producing a lot of headlines. Some are from academic papers, but many of these headlines are about companies who have timed announcements with the conference.

Ok, on to the links!

I’ll start with the acronyms.

- GPT-4 is the current best large language model (LLM) provided by OpenAI. It stands for “generative pre-trained transformer.”

- SOTA stands for “state of the art” and people unfortunately pronounce it like it’s a word, “So-Da” It means the best, the top, the number one.

- MMLU is an acronym for the Measuring Massive Multitask Language Understanding. It is a dataset of nearly 16k multiple choice questions that people use to evaluate the usefulness of large language models. The goal is to have the LLM guess the right answer given the question and a prompt. You can see examples of the questions here.

- Medprompt is a method proposed by researchers at Microsoft for creating GPT-4 prompts. In March, they released results showing that these prompting techniques improved the accuracy of GPT-4 on a subset of the MMLU dataset related to the medical domain. The prompting strategies are pretty straightforward, and you don’t need a background in AI to understand them.

What Microsoft announced this week was that they achieved the best scores reported to date (SOTA) on the entire MMLU dataset using GPT-4 and a small modification of the “medprompt” promoting strategy. And they open sourced the prompting strategy so you can use it too. 💥

Up next, we need to figure out what is "nice and clean"

The key to decoding this line is to know that "Mixtral" is related to Mistral AI, a 22-person Paris start-up founded seven months ago. They recently raised $400 million at a $2 billion valuation, or roughly $90 million per employee. They’re like a French OpenAI, but without the AI doomerism politics. Quite the opposite in fact: they publicly release their models and can download them for free and run them.

Mixtral 8x7B is the name of their new model. The “mix” is a reference to the neural network architecture they used, which is known as a “mixture of experts” (MoE). The 8x7B refers to the number of parameters, 7 billion per “expert” subnetwork. Why, then, does the model have 46.7B parameters instead of 56B parameters? Shared parameters! (This is as far down the rabbit hole that I got).

The top-level story is that everyone is racing to train better, smaller and faster LLMs. AI Twitter gets very excited when a new competitive model is released. The super secret GPT-4 model from OpenAI is still the one to beat, but the open source competitors are getting closer. Mistral claims Mixtral outperforms Meta’s largest model (Llama 2 70B) on “most benchmarks with 6x faster inference.” So the model is very good and very fast.

Finally, MLX is new open-source software from Apple that lets people run neural network models on Apple chips. There’s a lot to say about how hardware fits into the story of modern AI, but, in short, models have dependencies on certain brands of hardware. You cannot download the new, sparkly Mixtral model that was trained on NVIDIA GPUs and expect it to run on Apple’s bespoke CPU or GPU.

But now you can! Because “Mixtral 8x7B @ MLX” refers to the code that Apple has released to let you do that. 💥

We’re only a third of the way through the tweet, but I am 1,000 words in and my co-editor says I need to stop. Look for part II next week, and send me any tweets you want explained! I’m off to go for a run.

Comments

Sign in or become a Machines on Paper member to join the conversation.

Just enter your email below to get a log in link.